The EU has introduced the Artificial Intelligence Act, a highly laudable first step toward regulating AI.

It entered into force on 1 August 2024 and will be fully applicable two years later.

The dedicated website ‘EU Artificial Intelligence Act’ provides updates on the latest developments and offers information on a number of related issues:

https://artificialintelligenceact.eu/

Shaping Europe’s Digital Future, an official website of the EC, provides useful information about the AI Act and, in doing so, answers some important questions.

We reproduce a summarized version here:

AI Act

The AI Act is the first-ever legal framework on AI, which addresses the risks of AI and positions Europe to play a leading role globally.

The AI Act (Regulation (EU) 2024/1689 laying down harmonised rules on artificial intelligence) provides AI developers and deployers with clear requirements and obligations regarding specific uses of AI. At the same time, the regulation seeks to reduce administrative and financial burdens for business, in particular small and medium-sized enterprises (SMEs).

Why do we need rules on AI?

The AI Act ensures that Europeans can trust what AI has to offer. While most AI systems pose limited to no risk and can contribute to solving many societal challenges, certain AI systems create risks that we must address to avoid undesirable outcomes.

For example, it is often not possible to find out why an AI system has made a decision or prediction and taken a particular action. So, it may become difficult to assess whether someone has been unfairly disadvantaged, such as in a hiring decision or in an application for a public benefit scheme.

Although existing legislation provides some protection, it is insufficient to address the specific challenges AI systems may bring.

The new rules:

- address risks specifically created by AI applications

- prohibit AI practices that pose unacceptable risks

- determine a list of high-risk applications

- set clear requirements for AI systems for high-risk applications

- define specific obligations for deployers and providers of high-risk AI applications

- require a conformity assessment before a given AI system is put into service or placed on the market

- put enforcement in place after a given AI system is placed on the market

- establish a governance structure at European and national level

A risk-based approach

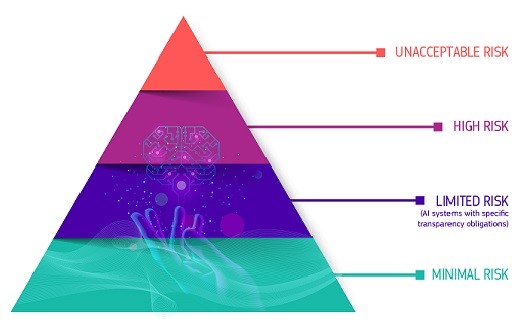

The Regulatory Framework defines 4 levels of risk for AI systems:

pyramid showing the four levels of risk: Unacceptable risk; High-risk; limited risk, minimal or no risk

Unacceptable risk

All AI systems considered a clear threat to the safety, livelihoods and rights of people are banned, from social scoring by governments to toys using voice assistance that encourages dangerous behaviour.

High risk

AI systems identified as high-risk include AI technology used in:

- critical infrastructures (e.g. transport), that could put the life and health of citizens at risk

- educational or vocational training, that may determine access to education and the professional course of someone’s life (e.g. scoring of exams)

- safety components of products (e.g. AI application in robot-assisted surgery)

- employment, management of workers and access to self-employment (e.g. CV-sorting software for recruitment procedures)

- essential private and public services (e.g. credit scoring denying citizens the opportunity to obtain a loan)

- law enforcement that may interfere with people’s fundamental rights (e.g. evaluation of the reliability of evidence)

- migration, asylum and border control management (e.g. automated examination of visa applications)

- administration of justice and democratic processes (e.g. AI solutions to search for court rulings)

High-risk AI systems are subject to strict obligations before they can be put on the market:

- adequate risk assessment and mitigation systems

- high quality of the datasets feeding the system to minimise risks and discriminatory outcomes

- logging of activity to ensure traceability of results

- detailed documentation providing all information necessary on the system and its purpose for authorities to assess its compliance

- clear and adequate information to the deployer

- appropriate human oversight measures to minimise risk

- high level of robustness, security and accuracy

All remote biometric identification systems are considered high-risk and subject to strict requirements. The use of remote biometric identification in publicly accessible spaces for law enforcement purposes is, in principle, prohibited.

A solution for the trustworthy use of large AI models

More and more, general-purpose AI models are becoming components of AI systems. These models can perform and adapt to countless different tasks.

While general-purpose AI models can enable better and more powerful AI solutions, it is difficult to oversee all capabilities.

Therefore, the AI Act introduces transparency obligations for all general-purpose AI models to enable a better understanding of these models, and additional risk management obligations for very capable and impactful models. These additional obligations include self-assessment and mitigation of systemic risks, reporting of serious incidents, conducting tests and model evaluations, as well as cybersecurity requirements. /// nCa, 13 August 2024